There’s a new ChatGPT update that multiplies what you can do with the chatbot: the AI can now analyze images, thanks to ChatGPT image input.

This isn’t as simple as it sounds. It can identify what’s in an image, sure, but it can also read text and math from an image, search or find out about the things in an image, and give feedback about the image. That’s a lot of possibility in a single feature.

Here's how to get it working right.

How to upload images in ChatGPT 4

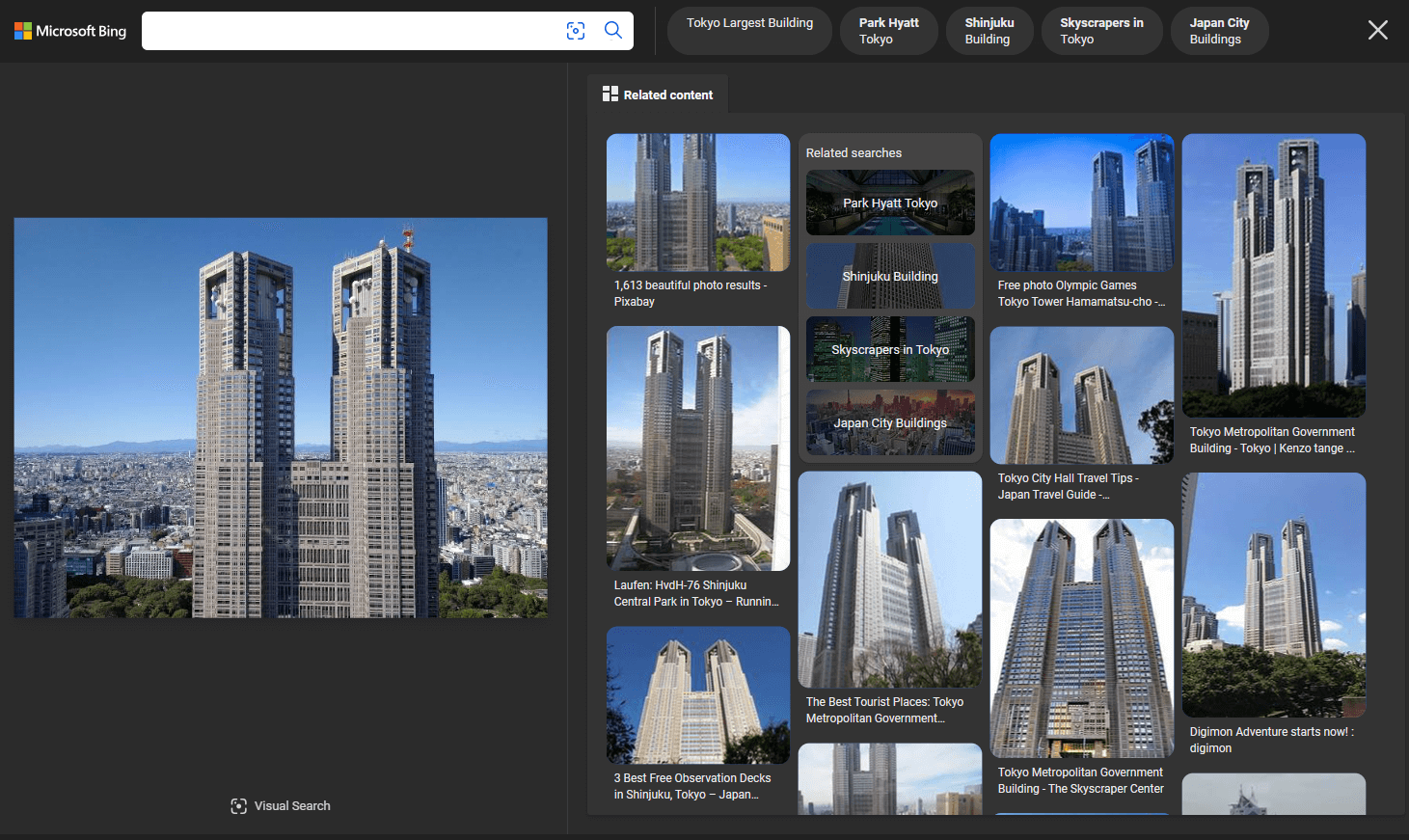

The process for inputting an image for ChatGPT to analyze is straightforward. Note that image inputs currently work only with GPT-4 on ChatGPT Plus and ChatGPT Enterprise. Once you’re set, just navigate to the chat box (on desktop or mobile) and click the paperclip icon.

Next, choose the file on your device, then add a prompt—anything from "Describe this image" to "What color shoes should I wear with this outfit?" ChatGPT can accept PNG, JPEG, or non-animated GIF files up to 20MB.
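If an upload keeps failing, it can help to check the file against those limits before you try again. Here's a minimal Python sketch of that check. The function names are made up for illustration, and reading "20MB" as 20 × 1024 × 1024 bytes is an assumption (the exact cutoff may differ). It looks at the file's leading bytes rather than its extension, and it doesn't try to detect whether a GIF is animated.

```python
from pathlib import Path

# Assumption: "20MB" is read here as 20 MiB; the real cutoff may differ.
MAX_BYTES = 20 * 1024 * 1024

# Leading "magic" bytes for the three formats listed above.
SIGNATURES = {
    "png": (b"\x89PNG\r\n\x1a\n",),
    "jpeg": (b"\xff\xd8\xff",),
    "gif": (b"GIF87a", b"GIF89a"),
}


def sniff_format(data: bytes):
    """Return 'png', 'jpeg', 'gif', or None based on the leading bytes."""
    for name, prefixes in SIGNATURES.items():
        if data.startswith(prefixes):
            return name
    return None


def check_image(data: bytes, max_bytes: int = MAX_BYTES):
    """Return a list of problems; an empty list means the file looks OK."""
    problems = []
    if sniff_format(data) is None:
        problems.append("not a PNG, JPEG, or GIF file")
    if len(data) > max_bytes:
        problems.append(
            f"file is {len(data)} bytes, over the {max_bytes}-byte limit"
        )
    return problems


def check_image_file(path):
    """Hypothetical convenience wrapper: run the checks on a file on disk."""
    return check_image(Path(path).read_bytes())
```

Calling `check_image_file("lunch.jpg")` (a hypothetical file name) returns an empty list when nothing looks wrong. Checking the bytes instead of the extension also catches files that were renamed without being converted.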

Learn more: Using ChatGPT data analysis to interpret charts & diagrams

What is ChatGPT image recognition?

ChatGPT image input certainly isn’t the first AI image recognition program. In fact, these tools have a fairly long history. In 2010 (basically the stone age in AI time scales), there was Google Goggles, an image recognition mobile app. Despite being a relic, it had some decidedly impressive features: the ability to recognize and translate text, and to find similar images using a reverse image search. However, ChatGPT image input isn’t meant for interpreting specialized or high-stakes images (like medical scans), so keep that in mind when you’re uploading content.

OpenAI's latest offering has features reminiscent of Goggles, but with a unique approach. The difference is how ChatGPT now interprets the actual contents of the image, rather than searching the web and comparing it to known images. Specifically, ChatGPT generates a description of the image and uses that description in its search.

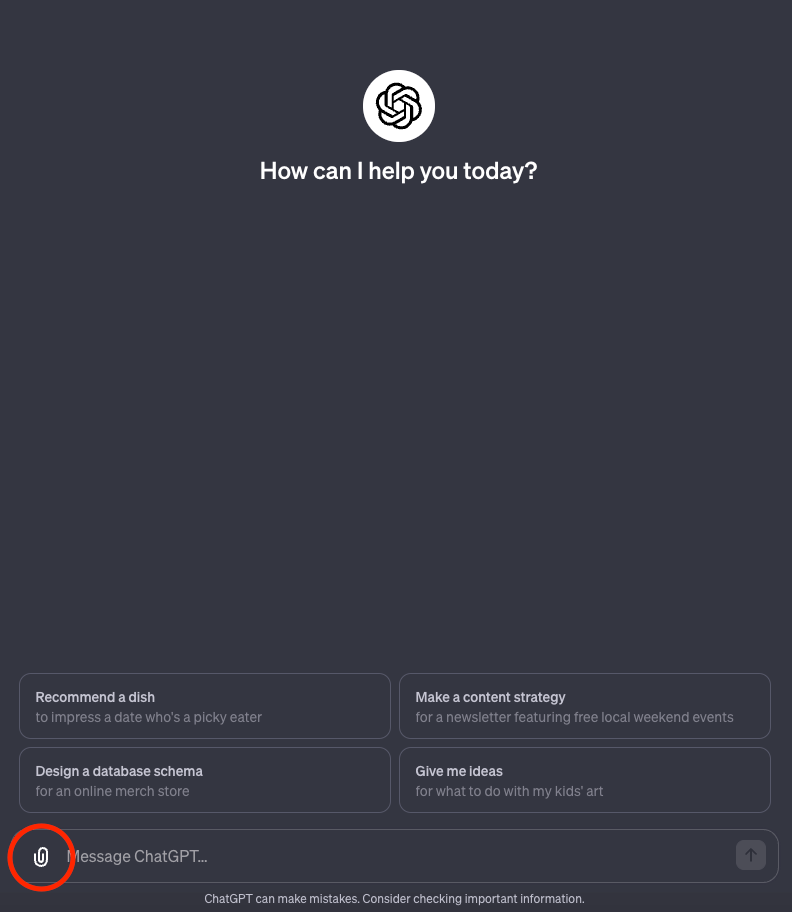

And it’s pretty accurate. When I first asked it to identify a lunch, it easily figured out I was eating clam chowder in a bread bowl.

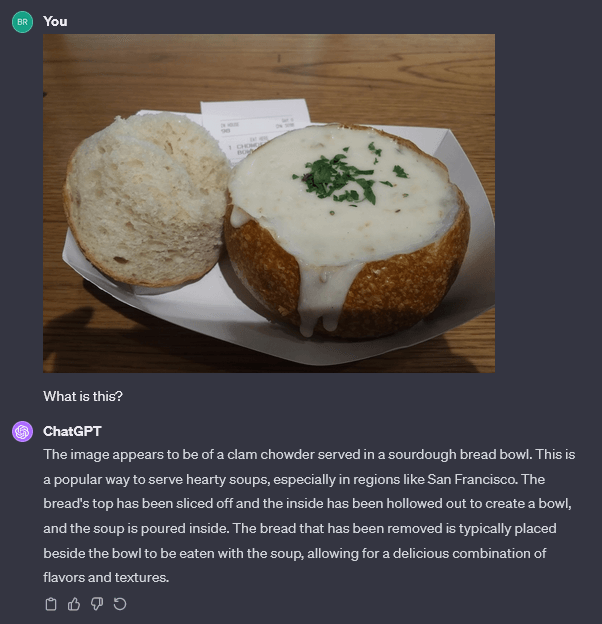

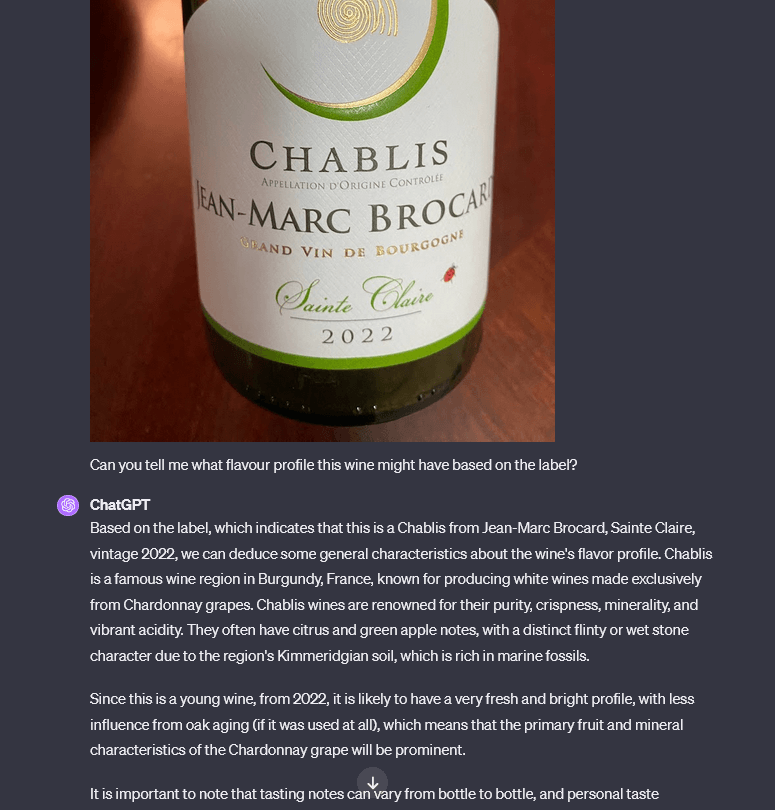

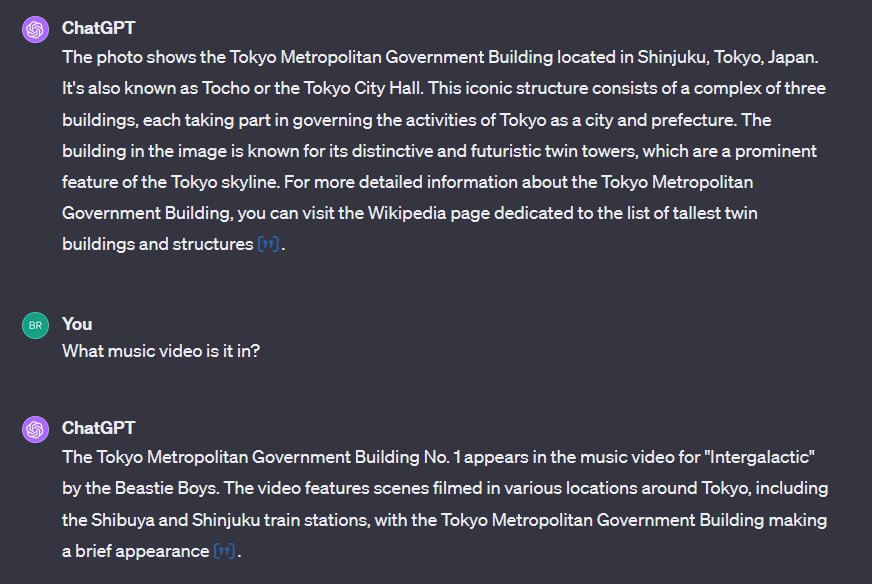

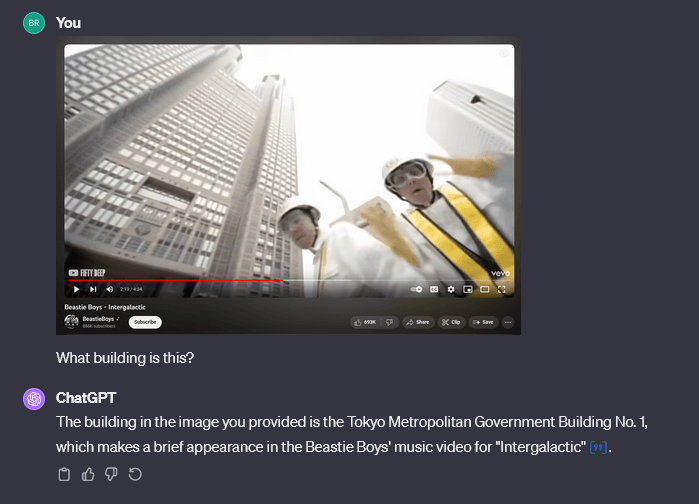

But in my next test, I asked it to identify the Tokyo Metropolitan Government Building from a photo I took. The tool’s reliance on descriptive text led to mixed results—it cycled through various search terms to describe the building, including ‘twin towers with spherical structures on top.’

On my first try, it eventually found the correct building but referenced an irrelevant Wikipedia page. When I tried again, it got the wrong place entirely (The Tokyo Towers). At least it got the city right. Meanwhile, a reverse image search located it immediately.

As with any emerging technology, expect continuous enhancements. The current version may not always be spot-on with citations or identifications, but it's evolving. In the meantime, be sure to double-check ChatGPT’s references.

Tip: This is where multi-agent prompting—that is, using multiple AI tools for a larger task—comes in handy. Where ChatGPT image input falls short, you can take advantage of Lens in Google Photos and Bard. Bing also has a reverse image search feature.

ChatGPT text and math recognition

When it comes to text recognition, ChatGPT shows impressive results, particularly with clear, neatly handwritten text or printed words. Just note that its accuracy can dip with non-Latin alphabets, so it might struggle with scripts like Japanese or Korean.

It's a mixed bag with translations, though. In my tests, ChatGPT's reading of handwritten French was passable, but it amusingly mistook a bottle of black rice vinegar for premium sake when interpreting Japanese—you don't want to make that mistake when you're bringing a gift for a dinner party! Meanwhile, when I used Google Lens, it accurately translated a Japanese sign that ChatGPT told me was "too blurry" to read. (Another perfect example of how using the multi-agent approach lets you play to each of the tools’ strengths.)

Here's a cool thing though: ChatGPT can recognize written math formulas, which is way easier than typing them out. But solving them? Not its strong suit. It tries, but don't bet your homework on it. After all, it's a prediction engine that's just trying to figure out what word comes next. When I put it to the test on my old macroeconomics assignments, it gave wrong but plausible answers four out of four times.

Regardless, the ability to input formulas is one big advantage over Lens, even if you have to do most of the heavy lifting from there.

Tip: There are some ChatGPT plugins specifically for math, so it feels like a win-win to use them together.

ChatGPT image search

Now that ChatGPT uses Bing to search the web, you've got options to retrieve information: either using ChatGPT's internal "knowledge," or using external knowledge from the web. The default for ChatGPT 4 is to dynamically choose the best model, so it decides for you whether it should search or not.

I found that if you ask about a specific element in an image, it tends to search, but if you ask an interpretive question about the contents of the image, it usually will attempt to answer based on its internal knowledge.

But rather than relying on its decisions, a better habit to get into is asking it explicitly to use search—or not.

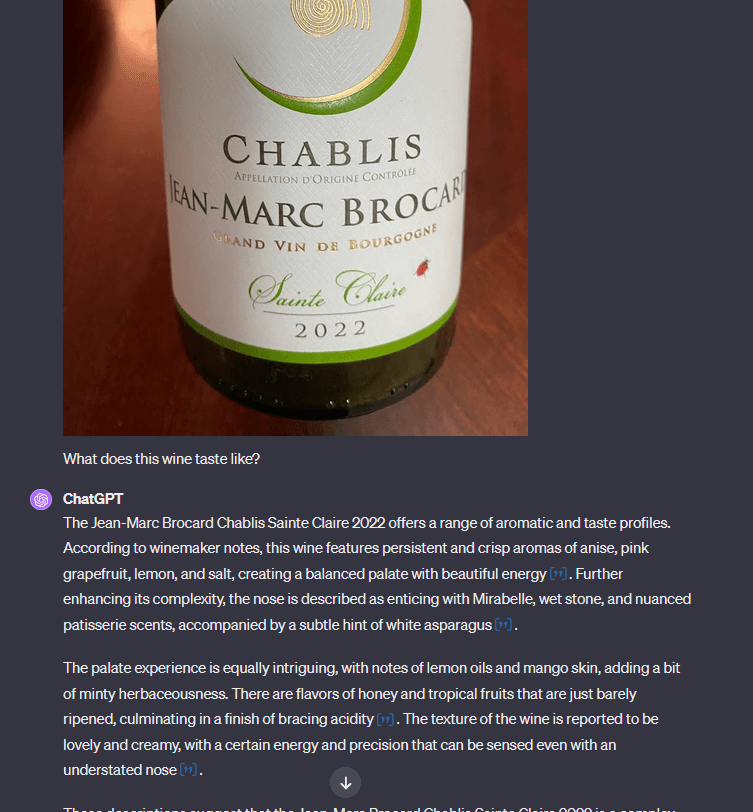

When I asked it to give me tasting notes on a certain wine from a picture of the bottle's label, it was able to seek out the exact wine by reading the text and searching for it through Bing. Meanwhile, when it used its internal knowledge, it gave me a description of the typical flavor profile of Chablis instead.

The ability to search is great when Bing search finds a reputable site, but awful when it lands on a high-ranking site that’s less authoritative. My wine search surfaced information from Wine.com, including notes from the winemaker themselves along with professional descriptions of the wine, so it was pretty solid. But in other tests, I've seen it end up on a less reliable site and retrieve that information instead, which is much less useful.

For now, you'll have to double-check ChatGPT’s work by doing research on your own to make sure it isn't digging up false information or information from questionable sources.

Tip: Monitor as it searches to see what it is looking for and on what sites. You can also explicitly ask it to tell you what it searched for.

ChatGPT image analysis in depth

For me, this is the real meat of what ChatGPT image input can do: You can analyze the image to see whether or not it fits with a theme, or whether it resonates with a certain persona.

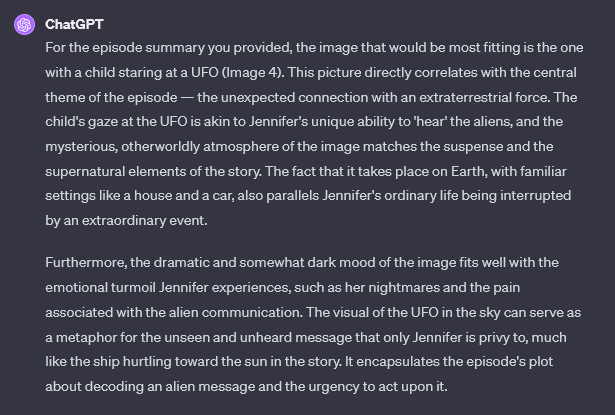

To test it, I gave ChatGPT six possible images for a fictional sci-fi/paranormal-themed podcast and asked which would fit with the overall theme. It rated all six, dropping one as a bad fit—an assessment I agreed with.

But how detailed would it get? Turns out, pretty detailed. I gave it a synopsis of an Outer Limits episode and asked which one was the best fit based on the episode description.

When I asked how I could improve the image to better fit the theme, it gave some pretty interesting ideas, specifically referencing various parts of the actual episode. A good illustrator could have used these suggestions to alter the image.

Frequently asked questions

Can ChatGPT take images as input?

Yes. ChatGPT’s GPT-4 model can read and interpret images you upload. Click the paperclip icon (on desktop or mobile) to add a file, then describe what you want it to do—like identify objects or read text.

Which file types work with ChatGPT image input?

ChatGPT supports JPEG, PNG, and non-animated GIF files up to 20MB. If your upload fails, check your file size or format.

Why can’t I upload pictures to ChatGPT?

You might be using the wrong file type or a file that’s too large. Make sure you’re on GPT-4, and your file is JPEG, PNG, or a non-animated GIF under 20MB. If it still doesn’t work, try refreshing your browser.

Can ChatGPT extract text from images?

Yes. It can usually read typed or clearly handwritten text. But it may struggle with sloppy handwriting or low-resolution images, so double-check the results.

Conclusion

This is yet another way ChatGPT is becoming multimodal, with its newfound ability to see, hear, and speak. I believe that multimodal is going to be one of the most important strains of AI. Even though the tools are brand new, thinking in terms of multiple types of inputs is a skill that everyone should be starting to develop.

Not to mention, ChatGPT now has all the power to exceed my capabilities in obscure music video trivia. Dang it!
