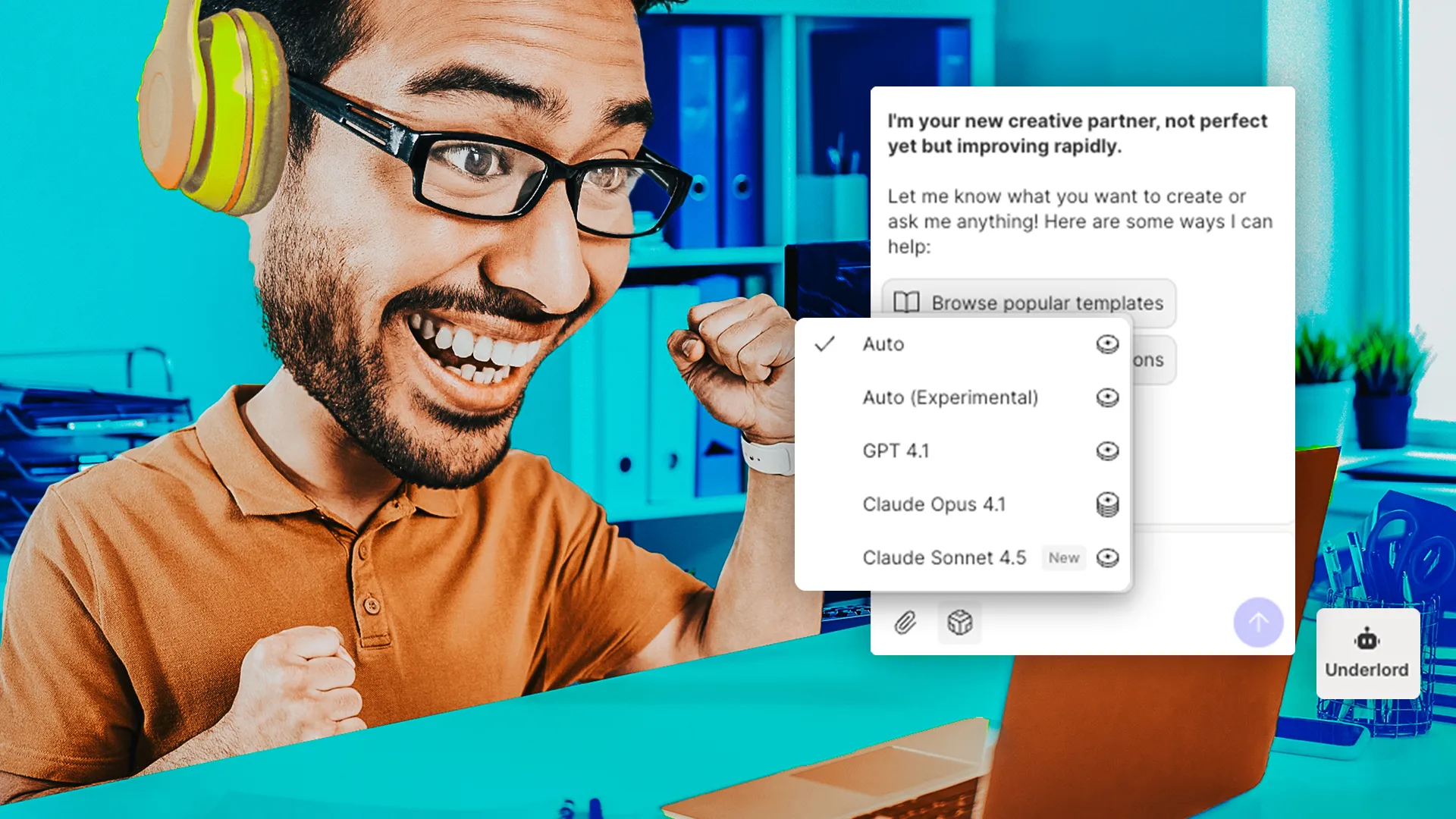

If you've used Underlord lately, you might have noticed something new: a model picker. And in that picker, a shiny new option called Claude Sonnet 4.5.

This wasn't some grand master plan. It was more like: we'd been wanting to add a model picker for ages, Anthropic released a new Claude model that looked interesting, and suddenly we had an excuse to actually ship the thing.

"We've wanted to add it for a very long time," said Ajay Arasanipalai, one of the engineers on the team that shipped this. "But never got around to shipping it, and Sonnet 4.5 was the catalyst."

What we were doing before (and why it wasn't enough)

Here's the thing about Underlord: it's not just one AI model doing everything. It's a mix of models from different providers, each handling different parts of the agent. Until now, we picked what we thought was a reasonable default configuration—something that balanced speed, cost, quality, and the complexity of actually building and testing it.

"With the model picker, we're letting you choose a point in that tradeoff space for yourself," Ajay said. "When you do, we swap every LLM request in the agent to use your chosen model."
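As a rough illustration of that swap, here is a minimal sketch of a picker-style override. It is not Underlord's actual code: the role names, model identifiers, and the `resolve_model` helper are all hypothetical, made up only to show the idea of a per-role default that a single user choice replaces everywhere.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical default configuration: each part of the agent maps to a model.
# These role and model names are placeholders, not Underlord's real setup.
DEFAULT_MODELS = {
    "planner": "provider-a/large",
    "tool_caller": "provider-b/fast",
    "summarizer": "provider-c/small",
}


@dataclass(frozen=True)
class LLMRequest:
    role: str    # which part of the agent is making the request
    prompt: str


def resolve_model(request: LLMRequest, user_choice: Optional[str] = None) -> str:
    """Return the model that should serve this request.

    If the user picked a model, it replaces the default for every role.
    Otherwise the request falls back to the default for its role.
    """
    if user_choice is not None:
        return user_choice
    return DEFAULT_MODELS[request.role]


if __name__ == "__main__":
    requests = [LLMRequest(role, "...") for role in DEFAULT_MODELS]
    # No picker selection: each role keeps its own default model.
    print([resolve_model(r) for r in requests])
    # Picker selection: every request is routed to the chosen model.
    print([resolve_model(r, user_choice="user-picked-model") for r in requests])
```

Keeping the choice in one resolver like this is one way an agent could stay provider and model agnostic: the code that builds prompts and handles tool calls never needs to know which model ends up serving the request.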

The canonical configuration we shipped with is still the best tested, which means that if you start experimenting with other models, you might hit some rough edges. We're being honest about that.

The boring (but important) challenges

You might expect that adding a new AI model to a video editing tool would involve some complex technical wizardry. Mostly it didn't.

"Honestly the 'challenges' were quite boring," Ajay said. "Most of it was around setting up the feature flags right. But that's perhaps a testament to our LLM infrastructure being provider/model agnostic already."

The real challenge—and this is ongoing—is evaluation. How do you know if one model is actually better than another for video editing?

"The single most important one, and this is a recurring theme, is that evaluating and comparing models is extremely hard," Ajay said. "Not only are video editing queries to our agent often subjective, but the final perception of quality has a lot to do with the final state your project ends up in—which is downstream of the side effects of tool calls, not just what the model outputs."

When the model is editing 45 minutes of footage, it takes a while for a human to review the result. Add in the randomness of model output, and isolating whether an improvement comes from the model itself becomes nearly impossible.
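To see why that randomness matters, here is a small sketch of the arithmetic. It assumes a setup that is purely hypothetical: a human rates each finished project on a 1 to 5 scale, and each model is run several times on the same request. Nothing here reflects how Underlord is actually evaluated. It only shows that a gap in average ratings has to be weighed against the run-to-run noise before it means anything.

```python
import math
import statistics


def compare_models(scores_a, scores_b):
    """Return (difference in mean rating, standard error of that difference).

    Each list holds hypothetical human ratings from repeated runs of one model.
    """
    diff = statistics.mean(scores_b) - statistics.mean(scores_a)
    # Standard error of a difference between two independent sample means.
    se = math.sqrt(
        statistics.variance(scores_a) / len(scores_a)
        + statistics.variance(scores_b) / len(scores_b)
    )
    return diff, se


def looks_like_real_difference(scores_a, scores_b, z=2.0):
    """Rough check: is the gap larger than about z standard errors?"""
    diff, se = compare_models(scores_a, scores_b)
    return abs(diff) > z * se


if __name__ == "__main__":
    # Made-up ratings for illustration only.
    model_a = [3, 4, 2, 5, 3]
    model_b = [4, 3, 5, 4, 3]
    diff, se = compare_models(model_a, model_b)
    print(f"mean gap: {diff:.2f}, standard error: {se:.2f}")
    print("distinguishable from noise:", looks_like_real_difference(model_a, model_b))
```

With only five noisy ratings per model, a gap of a few tenths of a point sits well inside the noise. Shrinking that noise means more runs and more human review time, which is exactly the expensive part.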

What this actually means for you

More choice. More flexibility. A new fast model that might work really well for your particular use case, or might not.

"A big part of launching the model picker is not just giving users more choice, but also learning for ourselves if and how people use different models differently," Ajay said.

In the future, we're planning to expose more granular control—maybe letting you pick different models for different parts of the agent instead of swapping everything at once. But for now, this is what we've got.

Try it out. See what you discover. Let us know if Claude Sonnet 4.5 does something surprisingly good (or surprisingly bad) with your footage. We're learning too.