OpenAI just opened up a bunch of new ways to interact with ChatGPT. The AI chatbot can now not only read what you write but also see the pictures you upload, hear the words you say, and even speak back.

In my view, this isn’t just about new features; it's a glimpse into the future of AI. We're finally getting access to what are called multimodal systems, systems that blend different types of understanding together. It's like having a conversation with a friend who doesn't just listen but also observes and interprets what they see—so if you pull out your phone to share a picture of your cat, they can see how cute Sparky is too.

The tools aren't fully multimodal yet. For now, they act more like translators between different types of data. Even so, these capabilities are a first step toward a whole new slate of systems that mesh different kinds of understanding. It's worth getting used to thinking multimodally and mastering this new way of interacting with AI now, so you'll be ready when more sophisticated multimodal systems arrive.


Visual ChatGPT—how to make ChatGPT see

With ChatGPT's new image analysis features, you no longer have to hunt for the perfect words to describe an image to ChatGPT: you can just show it the image.

If you've got a picture already, upload it to the prompt textbox using the paper clip icon (desktop) or the plus sign (mobile). Alternatively, if you have it copied to the clipboard, you can paste it right in.

Taking a live pic with your phone works too. When adding an image live, you can circle parts of it to guide ChatGPT's focus. For instance, you might circle something mysterious and ask "what's this?" You're not limited to one image; upload a bunch if you need to.

This feature has some of the most exciting possibilities. Don't know what something is? Ask ChatGPT to identify it. For instance, if you're scratching your head about which tool in your set is the Allen wrench, just show ChatGPT a picture, and it'll help you figure it out (and even suggest how to use it). Need to describe an image in words? It can give you a pretty good starting point. Wrestling with a confusing graph? ChatGPT can walk you through it like a personal data analyst.


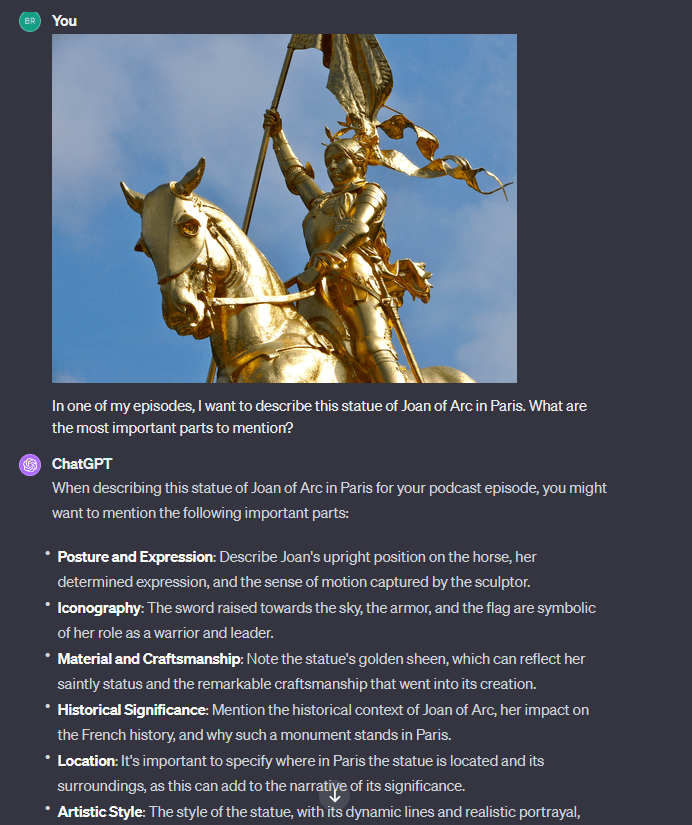

But my favorite feature? Thematic analysis. Basically, ChatGPT can analyze an image and judge whether it fits a particular theme. It's like having a second set of eyes with a flair for design.

Use it to choose among candidate images for a social media post, a thumbnail, or a webpage. In my testing, it showed a pretty good understanding of both the content and the tone of each image.

But it's even more powerful when you set it up to simulate a certain persona. Then you can ask it which image resonates the most with your target audience or ideal client.

You're not alone if you think this sounds complicated. This function does take a bit of time to get the hang of. We’ll be writing about these features in detail soon.

🤖 Tip: ChatGPT can also read text and math formulas from an image.

Voice control for ChatGPT—how to make ChatGPT hear

The "Hear" feature lets you speak your prompts instead of typing them; in other words, you can now talk to ChatGPT. You'll need your phone, though: for the moment, "Hear" is only available in the iOS and Android apps. To use it, tap the audio icon to the right of the prompt box.

At the moment, voice recognition works like a transcription tool: it simply turns your speech into text in the prompt box. Under the hood, it uses Whisper, OpenAI's speech recognition model.

The benefits will be familiar to anyone who uses speech-to-text to compose texts and emails on their phone: you talk a lot faster than you type, you don't have to fumble with a tiny keyboard, and if you're somewhere cold (like I am), you spend less time with your gloves off mid-walk when you just have to know what juice Snoop drinks with his gin (probably orange, if you're wondering).

My favorite way to use ChatGPT's speech recognition is for data input. You can read it an ingredient list, dictate a few lines of a book as part of your prompt, or let it listen to video or podcast audio directly (though in my tests, that last option didn't work as well).

This feature has its limitations: it's not great at deciphering accents or picking up on musical tones, for example, and it's no full-fledged voice assistant. It works best for quick, short prompts.


How to use ChatGPT’s text-to-speech function to make it speak

Although ChatGPT can "hear" your recorded prompt, that doesn't yet feel like a true back-and-forth conversation. If you're itching for a two-way, spoken chat with an AI, ChatGPT's new "Speak" feature is for you. Want a bedtime story? Just say so. It'll happily read you one in its AI voice, and you can even pick the voice if you're particular about bedtime vibes.

To start a conversation, tap the headphones icon beside the prompt box. This opens a voice conversation with ChatGPT, and you can choose among five different voices (you can always change your mind later).

The conversation works just like the regular ChatGPT you know and love, except that an AI voice reads ChatGPT's output aloud. You can shape its responses with custom instructions or more advanced prompts.

As with the "Hear" feature, voice conversations record and then transcribe your prompt. Patience is key here: transcription isn't instant and can take a few minutes to process. If you want to re-record your prompt, tap to cancel and give it another go. And don't worry about catching everything in one listen; the full transcript is available in your chat history.

At first, I felt put on the spot, having to keep coming up with prompts to sustain a back-and-forth conversation with ChatGPT. But I found that I didn't need to be perfect: it copes well with pronunciation problems, transcription errors, and user mistakes.

For instance, when I asked about OSFI (usually pronounced "Oss-fee"), it transcribed my question as "OSPI," a pretty good guess. Despite the error, ChatGPT searched for the information it thought I wanted, realized the transcription was wrong, searched again for the correct term, and gave me the right answer, complete with citations. Not bad.


A heads-up: if ChatGPT needs to browse the web, it takes a lot longer, and it won't signal that it's browsing. When it speaks the answer, it also doesn't indicate whether the answer includes citations. To check for citations, you'll need to switch back to text chat.

As in regular ChatGPT chats, it keeps track of earlier parts of the conversation, but it may veer off track if you don't refer back to previous points.

It works well for gathering information, but my favorite way to use it is to set ChatGPT up as a persona. Voice mode is the perfect place for a back-and-forth conversation with one.

🤖 Tip: Stick to short prompts. With longer recordings, it sometimes cuts off the end or drops pieces from the middle.

Image generation for ChatGPT—how to make ChatGPT draw

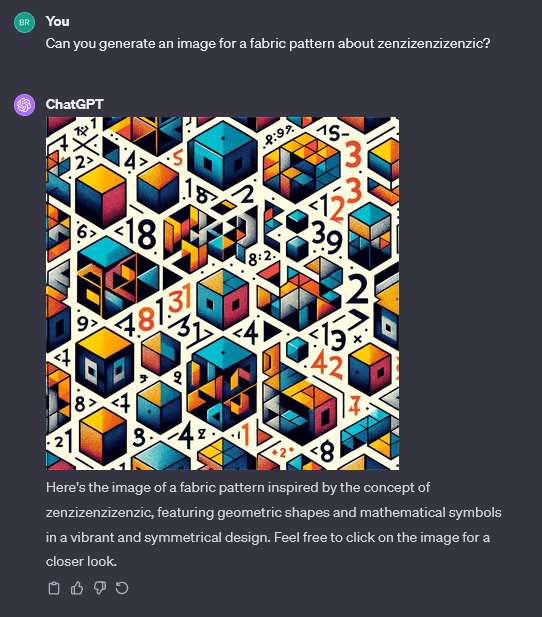

It didn't make the cut for the "see, hear, and speak" tagline, but thanks to its DALL-E integration, ChatGPT can now generate images, too. You can prompt ChatGPT to create images in any interaction mode: typing, voice recording, or during a voice conversation. All you have to do is ask it to generate an image; you don't need to write a prompt yourself. Instead, it builds one from the context of the conversation.

The prompt it writes is hidden from you. While generating an image, all it tells you is "Generating image." To see the prompt, you have to ask for it explicitly.

But here's a twist: The AI's self-generated prompts can be quite elaborate, often too complex for DALL-E to interpret accurately. And if you try giving it a specific prompt, it tends to go its own way, using something different. Despite several tries, I couldn’t get it to generate an image with the exact prompt I specified.

A quirk to note: ChatGPT can't “see” the images it creates. If you want it to analyze an image it's made, you'll have to re-upload it into the prompt window.

It's a pretty easy way to generate images, but if you plan to do a lot of image generation, my suggestion is to develop your own image prompting skills in a more controlled environment. Generating images here is still handy; just know that ChatGPT may ignore your meticulously crafted prompt in favor of a more, let's say, 'free-spirited' approach.

Honestly, it's a bit of a wild card. One time it produced a perfect illustration of a flaming ukulele; another time it painted my cat as a floating toaster. But that's half the fun, right? Once in a while, you'll stumble on a gem that's exactly what you want (or something weirder that you didn't know you needed).


Conclusion

While it's still early, these features show a lot of promise. Multimodal mastery is going to be an essential skill for any active AI user, so experiment with these new features to see how well they fit into your workflow. Who knows? You might discover whole new ways of working with these tools.

Frequently asked questions

Does ChatGPT have multimodal capabilities?

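Yes, though for now the features act more as translators between formats than as a fully multimodal system. ChatGPT can interpret images, transcribe voice prompts, generate spoken responses, and (through DALL-E) create images, so you can upload pictures, talk to ChatGPT, and get voice replies in a single conversation.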

How do I enable ChatGPT’s new voice and image features?

On mobile, open Settings, choose New Features, and enable voice conversations. Tap the headphone or photo icon to start talking or sharing images. On desktop, you can upload images directly in the chat box.

What types of content can ChatGPT interpret visually?

It can analyze most common images, such as photos, screenshots, and posters. ChatGPT can answer questions about the content or text in an image, and it can even help you troubleshoot items pictured in it.

Are these new multimodal features available on the free plan?

They’re rolling out first to ChatGPT Plus and Enterprise subscribers. Free-plan users may get these features later, but there’s no official date yet.

Does ChatGPT use multiple models to handle voice and image requests?

Yes. ChatGPT pairs a core language model (GPT-4) with specialized models: Whisper for speech recognition, a text-to-speech model for spoken replies, and DALL-E for image generation. These work together so it can understand and respond to different kinds of input.

Privacy and ethical considerations

Keep in mind that ChatGPT is designed not to identify real people in images, a limit meant to protect individual privacy. AI-generated voice also carries potential for misuse, so caution and transparency are key. Double-check any critical information ChatGPT provides, especially when it's interpreting images or audio. Used responsibly, these features give you the advantages of multimodal AI while minimizing the risk of unintentional harm.